Understanding the Akaike Information Criterion: A Powerful Tool for Model Selection

Introduction

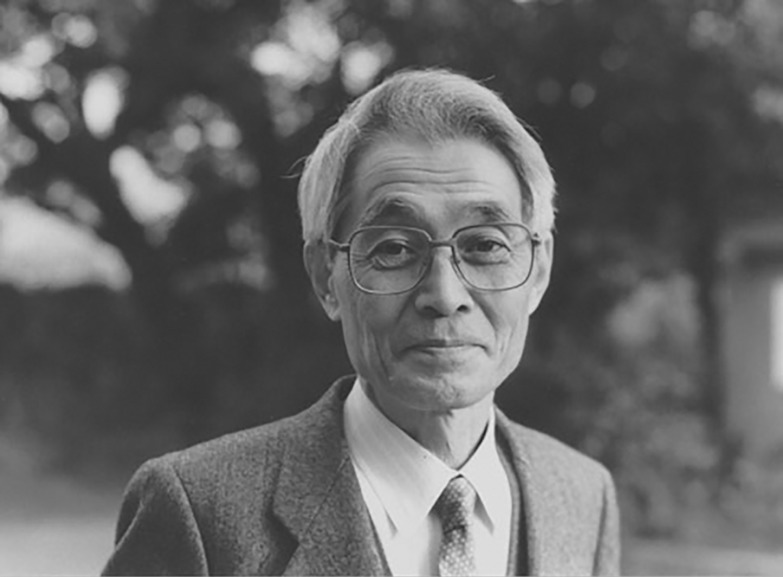

In statistical modeling and machine learning, choosing among candidate models is a crucial step in the analysis process. The Akaike Information Criterion (AIC), named after its creator Hirotugu Akaike, is a widely used metric that supports model selection by quantifying the trade-off between model complexity and goodness of fit. In this article, we cover the theory behind AIC, its mathematical formulation, and its practical applications in various fields.

The Concept of Model Selection

Model selection is an essential aspect of statistical analysis, particularly when dealing with complex data sets and various candidate models. The primary objective of model selection is to identify the model that best represents the underlying structure of the data while avoiding overfitting, where a model becomes excessively tailored to the training data and fails to generalize well to unseen data. Overfitting can lead to poor predictive performance and limited applicability in real-world scenarios.

The Akaike Information Criterion (AIC)

The Akaike Information Criterion was introduced by the Japanese statistician Hirotugu Akaike in 1973. It was developed as a measure of the relative quality of different models while simultaneously considering both the goodness of fit and model complexity. AIC provides a means to compare models with varying degrees of complexity and select the one that balances model fit and parsimony effectively.

Mathematical Formulation of AIC

The AIC value for a particular model is computed from the maximized value of the model's likelihood function, obtained through maximum likelihood estimation (MLE), and the number of estimated parameters in the model. The formula for AIC is as follows:

AIC = -2 * log(L) + 2 * k

Where:

log(L) is the natural logarithm of L, the maximized value of the model's likelihood function.

k is the number of estimated parameters in the model.

The AIC value is a trade-off between two opposing goals: minimizing the value of -2 * log(L) (maximizing the likelihood) and minimizing the value of 2 * k (penalizing the model for having more parameters).
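As a minimal sketch of the formula above, the following Python function computes AIC from a maximized log-likelihood and a parameter count. The function name and the example numbers are hypothetical and only illustrate the arithmetic.

```python
def aic(log_likelihood: float, k: int) -> float:
    """Return AIC = -2 * log(L) + 2 * k for a fitted model."""
    return -2.0 * log_likelihood + 2.0 * k


# Hypothetical fitted model: maximized log-likelihood of -120.5, 3 parameters.
example_aic = aic(log_likelihood=-120.5, k=3)
print(example_aic)  # 247.0
```

Here the fit term contributes 241.0 and the penalty term contributes 6.0.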

Interpreting AIC Values

AIC values are relative measures of model quality: a single AIC value has no meaning on its own, and only differences between models fitted to the same data are informative. Among the candidates, the model with the lowest AIC is estimated to offer the best balance between goodness of fit and simplicity. A common rule of thumb is that models within about two AIC units of the lowest value have comparable support, while larger differences point increasingly toward the lower-AIC model. This is a guideline, not a test of statistical significance.
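To make the comparison concrete, the sketch below computes each model's AIC difference from the best candidate, along with the quantity exp(-difference / 2), which is often read as the relative likelihood of a model compared with the best one. The model names and AIC values are made up for illustration.

```python
import math


def aic_differences(aic_values: dict[str, float]) -> dict[str, float]:
    """Return each model's AIC minus the smallest AIC in the set."""
    best = min(aic_values.values())
    return {name: value - best for name, value in aic_values.items()}


def relative_likelihoods(aic_values: dict[str, float]) -> dict[str, float]:
    """Return exp(-delta / 2) per model, where delta is its AIC difference."""
    deltas = aic_differences(aic_values)
    return {name: math.exp(-delta / 2.0) for name, delta in deltas.items()}


# Hypothetical AIC values for three candidate models fitted to the same data.
candidates = {"model_a": 247.0, "model_b": 248.2, "model_c": 256.0}
deltas = aic_differences(candidates)
best_model = min(candidates, key=candidates.get)

for name, delta in deltas.items():
    print(f"{name}: delta AIC = {delta:.1f}")
print("lowest AIC:", best_model)
```

In this made-up set, model_b sits within two units of model_a, so the two would be treated as roughly comparable, while model_c is clearly less supported.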

AIC in Model Selection

AIC has been widely adopted in various fields, including but not limited to:

Linear Regression: In linear regression, AIC helps identify the combination of predictors that best explains the variation in the dependent variable without adding unnecessary terms.

Time Series Analysis: AIC is used to select the order of an ARIMA (AutoRegressive Integrated Moving Average) model for forecasting future values in time series data.

Machine Learning: AIC can be applied to compare feature sets and model configurations in machine learning, provided the models are fitted by maximum likelihood so that a likelihood value is available.

Econometrics: AIC aids in selecting among competing econometric models used to understand economic phenomena.
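The linear regression case can be sketched as follows. This example compares an intercept-only model with a simple linear regression under the assumption of Gaussian errors, in which case the maximized log-likelihood depends only on the residual sum of squares. The data are invented, and k counts the error variance as an estimated parameter, which is one common convention.

```python
import math


def gaussian_log_likelihood(residuals: list[float]) -> float:
    """Maximized Gaussian log-likelihood, with variance estimated as RSS / n."""
    n = len(residuals)
    rss = sum(r * r for r in residuals)
    return -0.5 * n * (math.log(2.0 * math.pi) + math.log(rss / n) + 1.0)


def aic(log_likelihood: float, k: int) -> float:
    """Return AIC = -2 * log(L) + 2 * k."""
    return -2.0 * log_likelihood + 2.0 * k


# Hypothetical data with a roughly linear trend.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]
n = len(x)
mean_x = sum(x) / n
mean_y = sum(y) / n

# Model 1: intercept only (k = 2: the mean and the error variance).
residuals_mean = [yi - mean_y for yi in y]
aic_mean = aic(gaussian_log_likelihood(residuals_mean), k=2)

# Model 2: simple linear regression (k = 3: intercept, slope, error variance).
slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sum(
    (xi - mean_x) ** 2 for xi in x
)
intercept = mean_y - slope * mean_x
residuals_line = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
aic_line = aic(gaussian_log_likelihood(residuals_line), k=3)

print(f"intercept-only AIC: {aic_mean:.2f}")
print(f"linear model AIC:   {aic_line:.2f}")
```

Because the data follow a clear trend, the linear model has the lower AIC even though it pays a larger penalty for its extra parameter.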

AIC and Overfitting

One of the primary advantages of AIC is that it penalizes overly complex models. Each additional parameter adds 2 to the penalty term, so the AIC value decreases only if the extra parameter raises the maximized log-likelihood by more than 1. Thus, AIC discourages the inclusion of unnecessary variables, which reduces the risk of overfitting and tends to improve generalization to new data.
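The penalty can be illustrated with a small calculation that follows directly from the AIC formula. The helper name and the log-likelihood gains below are hypothetical.

```python
def aic_change(loglik_gain: float, extra_params: int) -> float:
    """Change in AIC when extra parameters raise log(L) by loglik_gain."""
    return -2.0 * loglik_gain + 2.0 * extra_params


# One extra parameter that barely improves the fit: AIC rises by about 1.2.
weak_gain = aic_change(loglik_gain=0.4, extra_params=1)

# One extra parameter that improves the fit substantially: AIC falls by 4.0.
strong_gain = aic_change(loglik_gain=3.0, extra_params=1)

print(f"{weak_gain:+.1f}")
print(f"{strong_gain:+.1f}")
```

A positive change means the added parameter made the model worse by AIC, and a negative change means the improvement in fit outweighed the penalty.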

AICc – A Correction for Small Sample Sizes

In situations where the sample size is small relative to the number of parameters, AIC tends to favor overly complex models. To address this issue, a corrected version of AIC known as AICc was proposed by Sugiura (1978) and further developed by Hurvich and Tsai (1989):

AICc = AIC + (2 * k * (k + 1)) / (n - k - 1)

Where n is the sample size.

AICc adjusts for the bias introduced by the finite sample size, making it more reliable in scenarios with limited data points.
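A minimal sketch of the correction, using the formula above, is shown below. The function name and the input values are hypothetical. The guard on n reflects the fact that the correction term is undefined or negative when n is not greater than k + 1.

```python
def aicc(aic_value: float, k: int, n: int) -> float:
    """Return AICc = AIC + 2k(k + 1) / (n - k - 1)."""
    if n - k - 1 <= 0:
        raise ValueError("AICc requires n > k + 1")
    return aic_value + (2.0 * k * (k + 1)) / (n - k - 1)


# Small sample: the correction is noticeable.
print(aicc(aic_value=247.0, k=3, n=20))  # 248.5

# Large sample: the correction nearly vanishes.
print(round(aicc(aic_value=247.0, k=3, n=2000), 3))
```

As n grows relative to k, the correction term shrinks toward zero and AICc approaches AIC.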

Conclusion

The Akaike Information Criterion (AIC) is a powerful tool in model selection, offering a balance between model fit and complexity. By penalizing overly complex models, AIC helps mitigate overfitting and favors models that are more likely to generalize to unseen data. Researchers, statisticians, and data scientists from various disciplines rely on AIC to compare candidate models for their data. As a fundamental concept in statistical modeling, AIC continues to play a central role in data-driven research and decision-making.
