The first step in a meta-analysis is to determine the type of outcome, which is either dichotomous (binary) or continuous. For dichotomous outcomes, the most common effect measures are relative risks, odds ratios (ORs), and hazard ratios, as well as the absolute risk difference and its inverse (ie, the number needed to treat). For continuous outcomes, the most common effect measures are mean differences and standardized mean differences; the latter are used when studies measure the outcome on different scales or in different units. Second, authors should specify whether the model uses fixed or random effects. Random-effects models are the most prevalent; they assume that the true effects vary among studies, and the aim is to estimate the average of those effects. Fixed-effect models are used in some circumstances, such as when there is little heterogeneity of effects or when outcomes are rare. The third step is to specify the method for calculating effect measures and their confidence intervals (CIs); the choice depends on the type of outcome, the effect measure, and the model. The inverse variance method is used in most circumstances. The Mantel-Haenszel method is used for dichotomous outcomes when events are rare and the number of participants is imbalanced between trial arms. The fourth step is to select an estimator of tau-squared (τ²), which quantifies the between-study variance; the Paule-Mandel or Sidik-Jonkman estimators are recommended over the DerSimonian and Laird estimator, especially when the number of studies is small. When the number of studies is small, authors may also use the Hartung-Knapp method to adjust the CIs of intervention effects.
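The inverse variance method and the fixed versus random effects choice can be illustrated with a short sketch. The function names and the log relative risks below are hypothetical, chosen only for illustration. The between-study variance is estimated here with the DerSimonian and Laird formula because it has a closed form; this is a simplification, not the Paule-Mandel or Sidik-Jonkman estimators recommended above, and the CIs use a normal approximation rather than the Hartung-Knapp adjustment.

```python
import math

def pool_inverse_variance(effects, variances, tau2=0.0):
    """Inverse-variance pooled effect and SE; tau2=0 gives a fixed-effect model."""
    weights = [1.0 / (v + tau2) for v in variances]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    return pooled, math.sqrt(1.0 / total)

def dersimonian_laird_tau2(effects, variances):
    """Method-of-moments estimate of the between-study variance (tau^2)."""
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sw - sum(wi ** 2 for wi in w) / sw
    return max(0.0, (q - (len(effects) - 1)) / c)

# Hypothetical log relative risks and their variances (illustration only).
log_rr = [-0.35, -0.10, -0.52, 0.05]
var = [0.04, 0.02, 0.09, 0.03]

tau2 = dersimonian_laird_tau2(log_rr, var)
for label, t2 in (("fixed", 0.0), ("random", tau2)):
    est, se = pool_inverse_variance(log_rr, var, t2)
    lo, hi = est - 1.96 * se, est + 1.96 * se  # normal-approximation 95% CI
    print(f"{label}: RR={math.exp(est):.2f} "
          f"(95% CI {math.exp(lo):.2f} to {math.exp(hi):.2f})")
```

Pooling is done on the log scale and exponentiated only for display, which is the usual convention for ratio measures.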

Specific methods and effect measures are used in particular types of meta-analyses. For example, in meta-analyses of diagnostic studies, summary receiver operating characteristic curves with 95% CIs are calculated, typically using bivariate or hierarchical models that account for the correlation between sensitivity and specificity. In prognostic meta-analyses, pooled c-statistics are used as a measure of discrimination, and observed/expected ratios are used as a measure of calibration.

Rare event meta-analyses pose a challenge, especially when the number of studies is small, the trial arms are imbalanced, or one or more arms have zero events. The Peto OR is a popular effect measure in this setting; however, other methods, such as the Mantel-Haenszel or inverse variance method, can yield less biased estimates. To handle zero events in one or more arms, approaches such as the treatment arm continuity correction can be applied.
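As a rough illustration of the Peto approach, the sketch below pools the observed-minus-expected event counts across studies. The function name and the tuple layout `(events_trt, n_trt, events_ctl, n_ctl)` are assumptions made for this example, and the counts are invented. Studies with zero events in one arm contribute without any continuity correction, and studies with zero events in both arms contribute nothing.

```python
import math

def peto_pooled_log_or(studies):
    """Pooled Peto log odds ratio and its SE.

    Each study is a tuple (events_trt, n_trt, events_ctl, n_ctl).
    """
    sum_o_minus_e = 0.0
    sum_v = 0.0
    for a, n1, c, n2 in studies:
        n = n1 + n2
        events = a + c
        expected = n1 * events / n  # expected events in the treatment arm
        # Hypergeometric variance of the treatment-arm event count.
        variance = n1 * n2 * events * (n - events) / (n ** 2 * (n - 1))
        sum_o_minus_e += a - expected
        sum_v += variance
    if sum_v == 0:
        raise ValueError("no study contributes information")
    return sum_o_minus_e / sum_v, 1.0 / math.sqrt(sum_v)

# Hypothetical rare-event trials (illustration only).
trials = [(1, 120, 3, 118), (0, 80, 2, 85), (2, 200, 4, 195)]
log_or, se = peto_pooled_log_or(trials)
print(f"Peto OR = {math.exp(log_or):.2f}, SE(log OR) = {se:.2f}")
```

The Peto method is known to behave poorly when arms are very imbalanced or effects are large, which is one reason the text mentions alternatives.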

The presence of statistical heterogeneity is tested with a χ² test (Cochran’s Q), and its extent is measured by the inconsistency (I²) statistic (low, I² < 30%; moderate, I² = 30%-60%; and high, I² > 60%).

I² values should be reported with their 95% CIs.
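A minimal sketch of how Cochran's Q and I² are computed from study effects and variances follows. The function names and data are hypothetical, and the category labels simply apply the thresholds stated above. The P value of the χ² test and the CI for I² are omitted because they need distribution functions beyond the standard library.

```python
def heterogeneity(effects, variances):
    """Return Cochran's Q, its degrees of freedom, and I^2 (in percent)."""
    w = [1.0 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    # I^2 is the share of total variation beyond what chance would explain.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return q, df, i2

def classify_i2(i2):
    """Label I^2 using the thresholds quoted in the text."""
    if i2 < 30.0:
        return "low"
    if i2 <= 60.0:
        return "moderate"
    return "high"

# Hypothetical log odds ratios and variances (illustration only).
q, df, i2 = heterogeneity([0.10, 0.45, -0.20, 0.60], [0.05, 0.04, 0.06, 0.08])
print(f"Q = {q:.2f} on {df} df, I2 = {i2:.0f}% ({classify_i2(i2)})")
```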

In a funnel plot, which is used to assess small-study effects, the x-axis represents each study’s effect estimate, while the y-axis represents the SE of the effect measure or the sample size of each study. Small studies are more prone to bias: those with unfavorable results may go unpublished, whereas those with favorable results are more likely to be published. The Harbord test of funnel plot asymmetry can be used to assess small-study effects statistically. Funnel plot asymmetry should not be equated with publication bias, because there are other explanations, such as true heterogeneity among study results, poor methodologic quality, reporting biases, and chance. At least ten studies should be available to test for small-study effects.
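To make the idea of an asymmetry test concrete, the sketch below implements an Egger-type regression. It regresses the standardized effect on precision, and an intercept far from zero suggests asymmetry. This is a simpler relative of the Harbord test named above, not the Harbord test itself. The function name, the `min_studies` parameter, and the data are assumptions for illustration; the ten-study minimum mirrors the recommendation in the text.

```python
import math

def egger_regression(effects, std_errors, min_studies=10):
    """Egger-type regression of standardized effect on precision.

    Returns (intercept, slope, intercept_se); the intercept measures asymmetry.
    """
    k = len(effects)
    if k < min_studies:
        raise ValueError(f"need at least {min_studies} studies, got {k}")
    x = [1.0 / se for se in std_errors]                 # precision
    z = [y / se for y, se in zip(effects, std_errors)]  # standardized effect
    mean_x = sum(x) / k
    mean_z = sum(z) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxz = sum((xi - mean_x) * (zi - mean_z) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = mean_z - slope * mean_x
    resid_var = sum((zi - intercept - slope * xi) ** 2
                    for xi, zi in zip(x, z)) / (k - 2)
    intercept_se = math.sqrt(resid_var * (1.0 / k + mean_x ** 2 / sxx))
    return intercept, slope, intercept_se

# Hypothetical log odds ratios and standard errors (illustration only).
effects = [-0.9, -0.7, -0.6, -0.5, -0.45, -0.4, -0.3, -0.3, -0.25, -0.2]
ses = [0.50, 0.45, 0.40, 0.35, 0.30, 0.25, 0.20, 0.15, 0.12, 0.10]
b0, b1, b0_se = egger_regression(effects, ses)
print(f"intercept = {b0:.2f} (SE {b0_se:.2f}), slope = {b1:.2f}")
```

A formal P value would compare intercept/SE against a t distribution with k - 2 degrees of freedom.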

Secondary analyses include cumulative meta-analyses, subgroup analyses, meta-regression analyses, and sensitivity analyses. Cumulative meta-analyses calculate pooled effects sequentially: data from the second study are added to those of the first published study, then data from the third study are added to the pooled effect of the first two, and so on. The resulting figures show how adding one study at a time changes the effect estimate and whether the pooled effect is maintained over time or diluted from a certain calendar year onward.
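The sequential pooling in a cumulative meta-analysis can be sketched as a running inverse-variance sum. The function name, the `(year, effect, variance)` layout, and the data are hypothetical. A fixed-effect model is assumed to keep the example short; a random-effects version would re-estimate the between-study variance at every step.

```python
import math

def cumulative_meta_analysis(studies):
    """Pool studies one at a time in calendar order.

    studies: list of (year, effect, variance).
    Returns a list of (year, pooled_effect, se) after each study is added.
    """
    results = []
    sum_w = 0.0
    sum_wy = 0.0
    for year, effect, variance in sorted(studies, key=lambda s: s[0]):
        w = 1.0 / variance
        sum_w += w
        sum_wy += w * effect
        results.append((year, sum_wy / sum_w, math.sqrt(1.0 / sum_w)))
    return results

# Hypothetical log hazard ratios by publication year (illustration only).
data = [(2012, -0.20, 0.03), (2005, -0.60, 0.10), (2009, -0.35, 0.05)]
for year, est, se in cumulative_meta_analysis(data):
    print(f"up to {year}: HR = {math.exp(est):.2f} (SE of log HR {se:.2f})")
```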

Subgroup analyses divide studies into subgroups based on baseline characteristics and use a test for interaction to see whether the effects differ between subgroups. In meta-analyses, subgroup analyses should be used only to generate hypotheses. Meta-regression analyses examine whether intervention effects are associated with baseline study characteristics or summary patient characteristics. The log of the intervention effect is usually used as the dependent variable in linear regression models. Sensitivity analyses assess alternative models (for example, fixed effects rather than random effects), methods (for example, Mantel-Haenszel rather than inverse variance), and effect measures (for example, absolute risk difference rather than relative risk); they also assess subsets of studies by excluding those that may explain high heterogeneity (eg, studies with a high risk of bias).
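One common form of the test for interaction compares the pooled subgroup estimates using a between-subgroup Q statistic. The sketch below assumes fixed-effect pooling within each subgroup. The function name, the dictionary layout, and the data are hypothetical.

```python
def subgroup_interaction(subgroups):
    """Between-subgroup heterogeneity (test for interaction).

    subgroups: dict mapping a subgroup name to a list of (effect, variance).
    Returns (q_between, df, pooled), where pooled maps each name to
    (pooled_effect, variance_of_pooled_effect).
    """
    pooled = {}
    for name, studies in subgroups.items():
        sum_w = sum(1.0 / v for _, v in studies)
        est = sum(y / v for y, v in studies) / sum_w
        pooled[name] = (est, 1.0 / sum_w)
    total_w = sum(1.0 / var for _, var in pooled.values())
    overall = sum(est / var for est, var in pooled.values()) / total_w
    # Weighted squared deviations of subgroup estimates from the overall one.
    q_between = sum((est - overall) ** 2 / var for est, var in pooled.values())
    return q_between, len(pooled) - 1, pooled

# Hypothetical log relative risks by baseline risk (illustration only).
groups = {
    "high baseline risk": [(-0.50, 0.04), (-0.40, 0.06)],
    "low baseline risk": [(-0.10, 0.05), (0.00, 0.03)],
}
q_b, df, pooled = subgroup_interaction(groups)
print(f"Q_between = {q_b:.2f} on {df} df")
```

Q_between is compared with a χ² distribution on the stated degrees of freedom; as noted above, a significant result should be treated as hypothesis generating.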

Additional assumptions must be explained and addressed in network meta-analyses (NMAs) of randomized controlled trials (RCTs). Transitivity between trials is assessed subjectively by comparing patient characteristics, interventions, controls, outcomes, and intervention/follow-up times; the more comparable these are, the better the transitivity assumption is met. Consistency between direct and indirect effects is assessed with a test of disagreement for each intervention comparison and Cochran’s Q statistic for the whole network. Multiple intervention comparisons can be summarized in league tables, and interventions can be ranked using the surface under the cumulative ranking curve in Bayesian meta-analyses or P-scores in frequentist meta-analyses. Finally, the geometry of networks per outcome is assessed in terms of the treatments used, the number of studies that included specific direct comparisons, and the number of patients and events in each comparison.
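For a single comparison, the idea of checking direct against indirect evidence can be sketched with a Bucher-style adjusted indirect comparison in a three-treatment loop (A, B, and a common comparator C). This is a simplified stand-in for the comparison-level tests run by NMA software, not a full network model. The function name and all numbers are hypothetical.

```python
import math

def direct_vs_indirect(d_ab, var_ab, d_ac, var_ac, d_bc, var_bc):
    """Compare a direct A-vs-B effect with the indirect one through C.

    Effects are on an additive scale (eg, log odds ratios).
    Returns (indirect_effect, difference, z, two_sided_p).
    """
    indirect = d_ac - d_bc            # A vs B obtained through comparator C
    var_indirect = var_ac + var_bc    # variances add for independent estimates
    diff = d_ab - indirect
    z = diff / math.sqrt(var_ab + var_indirect)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal P value
    return indirect, diff, z, p

# Hypothetical pooled log odds ratios and variances (illustration only).
ind, diff, z, p = direct_vs_indirect(
    d_ab=-0.30, var_ab=0.04,
    d_ac=-0.55, var_ac=0.03,
    d_bc=-0.15, var_bc=0.05,
)
print(f"indirect = {ind:.2f}, direct - indirect = {diff:.2f}, z = {z:.2f}, P = {p:.2f}")
```

A large difference relative to its SE suggests disagreement between direct and indirect evidence for that comparison.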

Reporting Considerations

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) group produced reporting guidelines for systematic reviews and meta-analyses. The PRISMA statement was released in 2009, replacing the Quality of Reporting of Meta-analyses (QUOROM) statement from 1999. There are no reporting guidelines for systematic reviews and meta-analyses of prognostic factors or prediction models.

PRISMA guidelines should be followed when reporting systematic reviews and meta-analyses. Authors should include a PRISMA checklist or a PRISMA extension checklist with their manuscript as a supplemental file.

A shortlist of questions for the researcher to consider

A shortlist of questions for the reviewer to consider

Consider commenting on the following when reviewing a meta-analysis:

1. Outcomes and clinical variables. Were the clinical variables and outcomes well described and relevant to the study’s goal? Was there any risk of variability in the definitions and measurements of clinical variables and outcomes?

2. Included studies.

- Was there a well-thought-out search strategy in place?

- Were there several different search engines used?

- Were suitable criteria for inclusion and exclusion used?

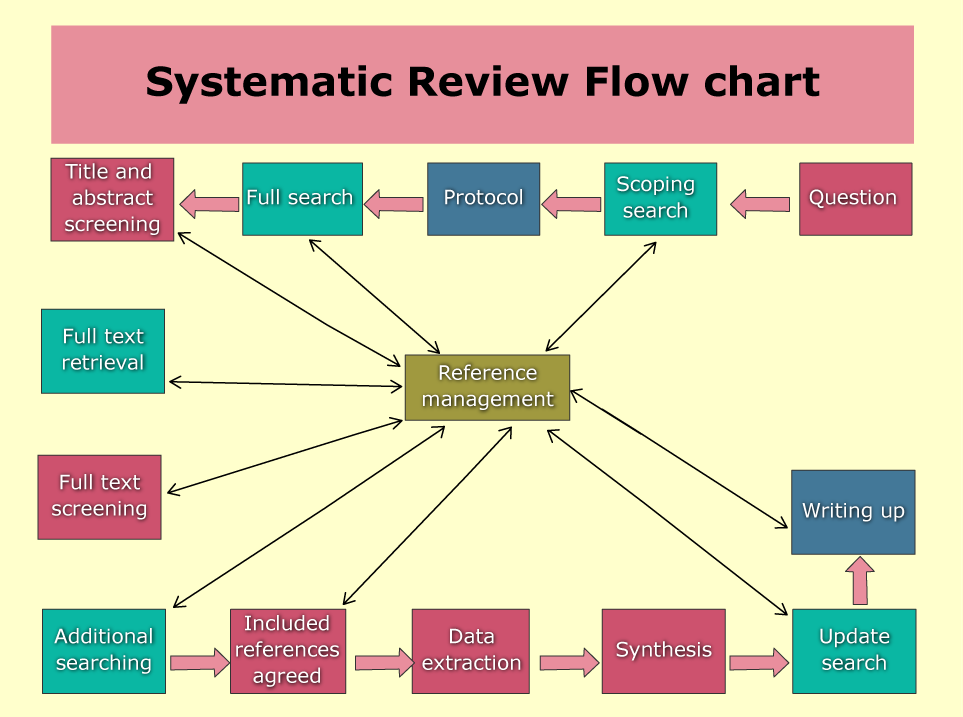

- Was there a flowchart of study selection?

- Was the possibility of publication bias considered?

3. The findings are analyzed and interpreted.

- Was the heterogeneity of the studies that were included assessed and reported?

- Was the evidence’s quality evaluated and reported (e.g., using GRADE methodology)?

- Was there a sensitivity analysis done?

- Were there any forest plots available?

- Were any limitations mentioned?

- Were the findings interpreted reasonably?
